Learn BERT - essential NLP algorithm by Google

Why take this course?

🚀 Embark on Your NLP Adventure with BERT! 🤖

Course Title: Learn BERT - Most Powerful NLP Algorithm by Google 🧠✍️

Instructor: Martin Jocqueviel 🏫👩‍🏫

Course Description:

Are you ready to unlock the secrets of natural language processing (NLP) with the most advanced algorithm on the block? Look no further! In this comprehensive course, "Learn BERT - Most Powerful NLP Algorithm by Google," you'll embark on a journey through the intricate world of NLP and emerge an expert in applying Google's groundbreaking BERT model.

Why Take This Course?

- Historical Perspective & Solid Foundation: We start with the history and evolution of BERT, ensuring that even novices can grasp the fundamental concepts and build up to mastering this powerful tool. 📜🚀

- Industry-Disruptive Techniques: Move beyond recurrent and convolutional models such as RNNs and CNNs! BERT is built on the Transformer architecture, which uses self-attention to read the context on both sides of every word, making it efficient and effective for a multitude of NLP tasks. 🛠️✨

- Real-World Applications: You'll not only understand BERT but also apply it practically. This course includes hands-on projects where you'll build two real-world NLP applications! 🌐🔧

Course Highlights:

- For Everyone: Whether you're a beginner or an experienced practitioner in the field of NLP, this course is designed to be accessible and enlightening. We promise to clarify any concept so that you can master BERT from scratch! 🤗

- State-of-the-Art Tools & Technologies: Work with TensorFlow 2.0 on Google Colab, so everything runs in the browser with no worries about software version compatibility or local machine constraints. 🛠️✨

- Hands-On Learning: With practical assignments and interactive exercises, you'll gain firsthand experience applying BERT to real-world NLP tasks. 🖥️💻

What You Will Learn:

- The history and development of BERT, and why it's a game-changer in NLP.

- Core concepts behind BERT and how it differs from previous models.

- Practical skills to apply BERT in various NLP applications, including sentiment analysis and question answering.

- How to implement BERT using TensorFlow 2.0 and Google Colab without the hassle of complex local setups.

- The ability to troubleshoot and optimize your BERT model for better performance on NLP tasks.
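A first step in applying BERT to any of the tasks above is splitting text into sub-word tokens. BERT uses WordPiece tokenization, and the greedy longest-match-first idea behind it can be sketched in plain Python. This is only an illustration, not the course's code: the function names and `TOY_VOCAB` are hypothetical, and a real BERT vocabulary holds roughly 30,000 entries and also handles punctuation and casing rules.

```python
# Hypothetical toy vocabulary; "##" marks a piece that continues a word.
TOY_VOCAB = {"[UNK]", "token", "##izer", "##s", "play", "##ing"}


def wordpiece_tokenize(word, vocab, unk_token="[UNK]"):
    """Split one word into sub-word pieces, always taking the longest match."""
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        match = None
        # Shrink the candidate from the right until it appears in the vocabulary.
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate
            if candidate in vocab:
                match = candidate
                break
            end -= 1
        if match is None:
            # No piece fits at this position: the whole word becomes unknown.
            return [unk_token]
        pieces.append(match)
        start = end
    return pieces


def tokenize(text, vocab=TOY_VOCAB):
    """Lowercase, split on whitespace, then apply WordPiece to each word."""
    pieces = []
    for word in text.lower().split():
        pieces.extend(wordpiece_tokenize(word, vocab))
    return pieces


print(tokenize("Tokenizers playing"))
```

With this toy vocabulary, a word that cannot be fully covered by known pieces is mapped to `[UNK]` as a whole, which is why a large vocabulary matters in practice.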

Join us now and be part of the NLP revolution with BERT! 🌟📚

Enroll today and transform your approach to natural language processing. Whether you're aiming to enhance your skills, expand your knowledge, or simply satisfy your curiosity about this remarkable algorithm, this course is your gateway to mastery in NLP with BERT. 🚀🌐

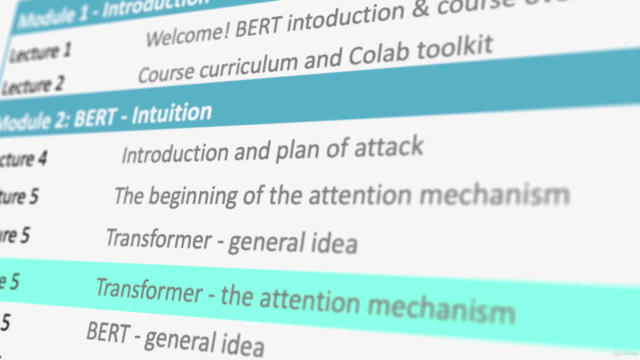


Comidoc Review

Our Verdict

This course offers a solid introduction to BERT and how it has revolutionized NLP. It covers essential topics like tokenizing text data, creating NLP applications, and implementing the BERT layer as an embedding in your models. However, aspiring learners should be prepared for uneven complexity levels in explanations and limited practical application of fine-tuning. The course could benefit from incorporating more visual elements to aid in understanding complex theories and reducing reliance on slides.
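To make "using the BERT layer as an embedding" a little more concrete: a BERT layer typically expects three aligned sequences per example, namely token ids, an attention mask, and segment ids. The sketch below shows how a single tokenized sentence might be packed into that shape. It is a minimal illustration under stated assumptions, not the course's code: `encode_for_bert` and `TOY_IDS` are hypothetical names, and the ids are made up rather than taken from a real BERT vocabulary.

```python
# Hypothetical id mapping for illustration only; real BERT vocabularies differ.
TOY_IDS = {"[PAD]": 0, "[UNK]": 1, "[CLS]": 2, "[SEP]": 3, "great": 4, "movie": 5}


def encode_for_bert(tokens, vocab=TOY_IDS, max_len=6):
    """Pack one tokenized sentence into ids, attention mask, and segment ids."""
    # Reserve two positions for the special [CLS] and [SEP] tokens.
    tokens = ["[CLS]"] + tokens[: max_len - 2] + ["[SEP]"]
    input_ids = [vocab.get(token, vocab["[UNK]"]) for token in tokens]
    # 1 marks a real token, 0 marks padding the model should ignore.
    attention_mask = [1] * len(input_ids)
    padding = max_len - len(input_ids)
    input_ids += [vocab["[PAD]"]] * padding
    attention_mask += [0] * padding
    # A single-sentence input uses segment 0 everywhere.
    segment_ids = [0] * max_len
    return {
        "input_ids": input_ids,
        "attention_mask": attention_mask,
        "segment_ids": segment_ids,
    }


encoded = encode_for_bert(["great", "movie"])
print(encoded)
```

For a sentiment classifier, the output vector at the `[CLS]` position is commonly fed to a small classification head, which is one way an embedding layer like this ends up inside a larger model.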

What We Liked

- Covers the history and reasoning behind BERT's impact on NLP

- Introduction to tokenizing tools and using BERT layer as an embedding

- Hands-on experience in building two NLP applications using BERT

- Detailed explanation of word embeddings

Potential Drawbacks

- Lack of live coding and visuals makes it hard for some students to follow

- Theoretical explanations are dense and would be easier to follow with added visualizations

- Limited practical exploration of fine-tuning on specific tasks

- Uneven explanation complexity hampers some students' understanding